-

October 30, 2025

Andrew Ferguson
Chair
Federal Trade Commission
600 Pennsylvania Ave NW
Washington, DC 20580

Re. Call for FTC Oversight, Investigation, and Suspension of Meta’s AI Chatbot Advertising Practice

Dear Chair Ferguson, Commissioner Holyoak, and Commissioner Meador:

We, the undersigned 36 organizations dedicated to privacy, data justice, civil rights, children’s rights, consumer protection, and research, write to demand immediate action in response to Meta Platforms, Inc.’s October 1, 2025, announcement that, effective December 16, 2025, it will begin using user interactions with AI chatbots (voice and text) to personalize advertising and content.[1]

This unprecedented use of deeply sensitive data presents outsized risks to consumer data privacy and security, and is at odds with the FTC’s 2020 Order secured against Facebook (now Meta). It also underscores the urgent need for enforcement of Section 5 of the FTC Act against unfair and deceptive AI practices. The danger of regulatory inaction at this critical moment, when generative AI is driving an unprecedented expansion of commercial surveillance, could be catastrophic and irreversible.

Meta’s Expanding Reliance on Generative AI for Surveillance Marketing

The Commission should recognize that Meta’s decision to monetize AI-driven chatbots without even a basic opt-out mechanism reflects the company’s broader strategy: an aggressive expansion of AI for marketing and advertising. More than half of all U.S. internet users—163 million people—are projected to use generative AI by 2029, making it one of the fastest-adopted technologies in modern history, outpacing both personal computers and the internet in their early phases.[2] Roughly one third of Americans under 30 already engage with AI several times daily, and it is projected that by 2029, more than 55 million Gen Z users (ages 18–34) will rely on these tools.[3] Meta is at the center of this transformation.
Its platforms, Facebook and Instagram, remain dominant among U.S. internet users, and its advertising models are increasingly driven by AI systems such as Advantage+ shopping and ad services, “audience optimization,” automated creative testing, video personalization, conversational search, and virtual influencers.[4] WARC recently described Meta’s AI ad system as enabling marketers to leverage “the richest possible signals to train AI with,” underscoring that the company’s marketing innovations depend on harvesting ever greater amounts of user data.[5]

Meta’s harvesting of AI chatbot interactions for advertising must be seen in this broader context. It is not a marginal feature but rather part of a deliberate strategy to normalize a fundamental expansion of surveillance-driven and behavior-changing marketing. The company’s AI-optimized formats are explicitly designed to drive “actionable” reach by shaping how people spend their money, time, and attention.[6] Analysts have already suggested that Meta’s chatbot could “dramatically boost engagement” by initiating unprompted conversations based on prior interactions.[7] Even eMarketer, an industry research group, cautioned in response to Meta’s October 1, 2025 announcement that “guardrails on ad placement within chatbot conversations need to be tight to prevent what could be perceived as exploitive targeting.”[8] These developments carry serious consequences for all users—especially youth—by embedding invasive AI data practices into daily online interactions without meaningful safeguards.
They also underscore why the Commission must exercise its oversight authority under the consent decree and, if necessary, pursue enforcement under Section 5 of the FTC Act to ensure Meta’s practices are lawful, transparent, and subject to appropriate safeguards.

Consent Decree Obligations Require FTC Oversight and Investigation

The FTC’s 2020 Order[9] against Meta (formerly Facebook) imposes clear obligations on Meta, and the FTC has both authority and a responsibility to conduct oversight to ensure these obligations are being met. It is crucial that the FTC exercise this oversight authority here.

Parts VII and VIII of the 2020 Order require that Meta, among other things:

· Conduct a detailed product review or data privacy and security risk assessment for each new or modified product, service, or practice that collects or uses consumer data;
· Document and implement safeguards to protect consumer privacy in response to any risks identified;
· Retain records for FTC or independent assessor review; and
· Obtain an independent biennial assessment of its program and produce a Quarterly Privacy Review Report, which must be furnished to the Commission upon request.

Meta’s proposed use of conversational AI data for advertising and content personalization is plainly a “new or modified” practice under this framework.
Conversational data generated through AI chat interactions is substantially more sensitive than ordinary behavioral data, as it may reveal personal relationships, mental health concerns, political views, and other intimate information.[10] Accordingly, Meta must conduct a comprehensive privacy and security risk assessment of this practice, and that assessment must be subject to formal FTC oversight, review, and documentation.

Furthermore, under the modified order, Meta is prohibited from misrepresenting the extent to which it maintains the privacy or confidentiality of user information.[11] While Meta announced the launch of generative AI features across its apps on September 27, 2023, it did not disclose until October 1, 2025, that it would collect and use AI-chat interactions for content and ad personalization and targeting.[12] That announcement was followed by an email to users with only a brief update to the Privacy Policy: “We’ll start using your interactions with AIs to personalize your experiences and ads.” Most users do not follow corporate blogs, and failing to clearly address that AI-chat interactions are covered, or to provide meaningful choices such as an opt-in, suggests an effort to obscure the risks of this practice. This lack of transparency requires investigation under the consent decree’s provisions. Without clear and forthright disclosure, the public has no assurance that Meta’s practices comply with its obligations or that the safeguards envisioned by the decree are functioning as intended.

We call on the Commission to exercise its oversight authority under the consent decree and publicly disclose its findings.

The FTC Must Finalize the 2023 Proposed Modifications to the 2020 Order

We are also deeply concerned that the Commission has stayed proceedings on the 2023 proposed modifications to the 2020 Order, which would strengthen existing obligations and prohibit Meta from monetizing minors’ data except for limited, security-related purposes.
This delay is indefensible as Meta escalates its commercial surveillance practices.

By failing to finalize these safeguards, the Commission leaves children and teenagers—those most at risk of manipulation through AI-driven advertising—without meaningful protection. It also signals to Meta and the broader industry that there are no enforceable limits on the use of conversational data, AI-derived inferences, or other sensitive digital interactions. This passivity undermines the Commission’s credibility and exposes consumers to escalating risks as generative AI expands the scope and scale of commercial surveillance.

The FTC Must Initiate a Section 5 Investigation and Meta Must Suspend Implementation

Even beyond the consent decrees, Meta’s proposal appears to constitute an unfair or deceptive practice in violation of Section 5 of the FTC Act.

· Deceptive practice: Consumers reasonably expect that private chats and conversational interactions with digital assistants will not be monetized for advertising unless explicitly and clearly disclosed. Meta’s plan to use chatbot interactions for ad targeting and personalization, without prior clear disclosure and opt-in consent, is a material omission or misrepresentation likely to mislead consumers.

· Unfair practice: The use of private conversational data for advertising imposes a substantial injury that consumers cannot reasonably avoid, and which is not outweighed by countervailing benefits. Harms include loss of privacy, the chilling of free expression, discrimination via algorithmic profiling, and reputational or psychological risk.
Minors, in particular, may be harmed by personalized social media feeds whose content is optimized for engagement, producing a variety of downstream harms such as exposure to harmful content, reinforcement of unhealthy behaviors, and adverse effects on mental health.

The Commission should open a Section 5 investigation and direct Meta to suspend implementation of this practice unless and until the Commission has completed a public investigation and determined that it complies with federal law.

FTC Leadership and Decisive Action Needed Now

The FTC has repeatedly affirmed that the deployment of artificial intelligence does not exempt firms from existing consumer protection obligations. Yet Meta’s plan to repurpose conversational AI data for advertising illustrates the dangers of regulatory inaction: absent intervention, the practice will normalize a level of surveillance that is qualitatively more intrusive than traditional behavioral tracking.

Conversational data is uniquely revealing, capturing intimate details of users’ personal lives, relationships, health, and beliefs. It therefore warrants heightened scrutiny under both the consent decrees and Section 5 of the FTC Act. At a minimum, this category of data should be treated as sensitive information requiring affirmative, opt-in consent from adults (and a categorical prohibition on monetization of youth data) before it can be used for advertising. Failing to impose this standard would effectively permit firms to unilaterally exploit the most private forms of digital interaction for commercial gain.

Requests for Relief

Accordingly, we respectfully but urgently request that the Commission do the following:

1. Publicly confirm whether it has reviewed Meta’s privacy and security risk assessments of Meta’s proposed new practice to use AI chat personal data for ad personalization and targeting. The FTC must address whether Meta has engaged in any misrepresentations regarding these new practices. The Commission must release its findings to the public.
2. Initiate a formal Section 5 investigation into Meta’s unfair and deceptive use of chatbot data for advertising and content personalization without informed, opt-in consent.
3. Require Meta to suspend implementation of this practice (slated to go into effect December 16, 2025) pending completion of the Commission’s investigations, public disclosure of findings, and approval under both the FTC’s consent-decree oversight and enforcement authority.
4. Immediately resume and finalize the 2023 proposed amendments to the 2020 Order, particularly those prohibiting monetization of minors’ data and other high-risk data types, without further delay.
5. Issue public guidance reaffirming that firms are not exempt from privacy obligations under the FTC Act when they use AI and generative AI and clarifying that conversational interaction data is presumptively sensitive, warranting opt-in consent for adults and categorical prohibition for minors.

The Commission has both the authority and the responsibility to act. Failure to do so will permit Meta to proceed unchecked and embolden other companies to adopt similarly invasive AI-driven practices, eroding consumer trust and exposing millions of Americans, especially children and vulnerable communities, to unbounded digital exploitation.[13]

We urge you to act without delay.
If you would like to discuss further, please contact Jeff Chester (jeff@democraticmedia.org) or John Davisson (davisson@epic.org).

Respectfully,

Center for Digital Democracy
Electronic Privacy Information Center (EPIC)
20/20 Vision
5Rights Foundation
Arkansas Community Organizations
Becca Schmill Foundation
Blue Rising
Center for Economic Integrity
Center for Oil & Gas Organizing
Check My Ads Institute
Children and Screens: Institute of Digital Media and Childhood Development
Children Now
Common Sense Media
Consumer Action
Consumer Federation of America
Demand Progress Education Fund
Equality New Mexico
Fairplay
Indigenous Women Rising
Investor Alliance for Human Rights
Lynn’s Warriors
Mossville Environmental Action Now (MEAN)
Mothers Against Media Addiction (MAMA)
National Association of Consumer Advocates
New Jersey Appleseed Public Interest Law Center
Open Media and Information Companies Initiative (Open MIC)
Oregon Consumer Justice
Oregon Consumer League
ParentsSOS
ParentsTogether Action
Public Citizen
Suicide Awareness Voices of Education (SAVE)
Tech Justice Law Project
Tech Oversight Project
UltraViolet
Virginia Citizens Consumer Council

[1] Improving Your Recommendations on Our Apps With AI at Meta, Meta (Oct. 1, 2025), https://about.fb.com/news/2025/10/improving-your-recommendations-apps-ai-meta/.

[2] Jacob Bourne, GenAI User Forecast 2025, eMarketer (July 22, 2025), https://content-na1.emarketer.com/genai-user-forecast-2025.

[3] Id.

[4] Jeremy Goldman, Instagram, Facebook Help Meta Gain Share of US Social Ad Spending for First Time Since 2018, eMarketer (Oct. 13, 2025), https://content-na1.emarketer.com/meta-reclaims-ad-spending-momentum-even-reddit-breaks.

[5] WARC, What We Know About Marketing on Facebook (Aug. 2025), www.warc.com/SubscriberContent/article/bestprac/what-we-know-about-marketing-on-facebook/en-gb/110336.

[6] Id.

[7] Bourne, supra note 2.

[8] Jacob Bourne, AI, Consumer Behavior, and the Trust Economy, eMarketer (Oct. 20, 2025), https://content-na1.emarketer.com/ai-consumer-behavior-trust-economy.

[9] Modified Decision and Order, In the Matter of Facebook, Inc., C-4365 (FTC Apr. 27, 2020), www.ftc.gov/system/files/documents/cases/c4365facebookmodifyingorder.pdf.

[10] Empirical research, teen surveys, and litigation show that users often disclose highly sensitive personal information to AI chatbots, including names, locations, secrets, mental health crises, and sexual preferences. Design choices (friend-like personas, nudges, and non-judgmental tones) help explain why generative chatbots elicit such intimate disclosures. See Common Sense Media, Talk, Trust & Trade-Offs: How and Why Teens Use AI Companions (July 16, 2025), www.commonsensemedia.org/research/talk-trust-and-trade-offs-how-and-why-teens-use-ai-companions; see also Kate Payne, An AI Chatbot Pushed a Teen to Kill Himself, a Lawsuit Alleges, Associated Press (Oct. 25, 2024), www.apnews.com/article/chatbot-ai-lawsuit-suicide-teen-artificial-intelligence-9d48adc572100822fdbc3c90d1456bd0; Niloofar Mireshghallah et al., Trust No Bot: Discovering Personal Disclosures in Human-LLM Conversations in the Wild, arXiv e-prints (July 2024), https://arxiv.org/abs/2407.11438; Naomi Nix & Nitasha Tiku, Meta AI Users Confide on Sex, God and Trump. Some Don’t Know It’s Public, Washington Post (June 13, 2025), www.washingtonpost.com/technology/2025/06/13/meta-ai-privacy-users-chatbot/.
The FTC recently noted that chatbots “are designed to communicate like a friend or confidant, which may prompt some users, especially children and teens, to trust and form relationships with chatbots.” Federal Trade Commission, FTC Launches Inquiry into AI Chatbots Acting as Companions (Sept. 2025), www.ftc.gov/news-events/news/press-releases/2025/09/ftc-launches-inquiry-ai-chatbots-acting-companions.

[11] See Modified Decision and Order, In the Matter of Facebook, Inc., C-4365, at 5 (FTC Apr. 27, 2020), www.ftc.gov/system/files/documents/cases/c4365facebookmodifyingorder.pdf.

[12] Meta announced the launch of its AI assistants for consumers on September 27, 2023, accompanied by the post “Privacy Matters: Meta’s Generative AI Features.” See Mike Clark, Privacy Matters: Meta’s Generative AI Features, Meta (Sep. 27, 2023), https://about.fb.com/news/2023/09/privacy-matters-metas-generative-ai-features/. Public statements by Meta about its use of chatbot or AI-assistant interaction data from U.S. users—as distinct from publicly available posts or shared content—are difficult to identify with precision. A disclosure on “artificial intelligence technology” linked to Meta’s June 26, 2024 privacy policy, however, states: “This is why we draw from publicly available and licensed sources, as well as information people have shared on Meta Products, including interactions with AI at Meta features. We keep training data for as long as we need it on a case-by-case basis to ensure an AI model is operating appropriately, safely and efficiently.” Meta, How Meta Uses Information for Generative AI Models and Features (last visited Oct. 29, 2025), https://www.facebook.com/privacy/genai.
This and subsequent corporate disclosures, see, e.g., Meta, Meta’s AI Products Just Got Smarter and More Useful (Sep. 25, 2024), https://about.fb.com/news/2024/09/metas-ai-product-news-connect/; Meta, Building Toward a Smarter, More Personalized Assistant (Jan. 27, 2025), https://about.fb.com/news/2025/01/building-toward-a-smarter-more-personalized-assistant/, reference the use of chatbot interaction data for training but do not identify advertising or content personalization as a purpose. Meta did not announce such uses until October 1, 2025, when it stated that chatbot interactions would be leveraged for ad targeting and content personalization beginning December 16, 2025.

[13] Laurie Sullivan, OpenAI Browser Keeps Search, Adds Memory Benefiting Advertisers, MediaPost (Oct. 21, 2025), www.mediapost.com/publications/article/410064/openai-browser-keeps-search-adds-memory-benefitin.html.

-

Press Release

Advocates Urge FTC to Halt Meta’s Plan to Use AI Chatbot Data for Ads

Meta’s chatbot data grab risks normalizing surveillance-driven marketing across the industry, setting a dangerous precedent for privacy and consumer protection.

Contact:
Jeff Chester, 202-494-7100, Jeff@democraticmedia.org
John Davisson, 202-483-1140, davisson@epic.org

Washington, D.C. A coalition of 36 privacy, consumer protection, children’s rights, and civil rights advocates and researchers today called on the Federal Trade Commission (FTC) to investigate and halt Meta’s recently announced plan to use conversations with its AI chatbots for advertising and content personalization. The letter, sent to FTC Chair Andrew Ferguson and Commissioners, urges the agency to exercise its oversight authority and act under both Meta’s existing consent decree and Section 5 of the FTC Act to stop this practice from moving forward.

On October 1, 2025, Meta announced that beginning December 16 it would use chatbot interactions on Facebook, Instagram, and WhatsApp to inform ad targeting and personalization. These conversations often contain highly sensitive disclosures - including health, relationship, and mental health information - yet Meta has provided no opt-in consent mechanism and no assurances of heightened privacy or security safeguards.

In the letter, the coalition calls on the FTC to:

· Enforce Meta’s existing consent decrees and require disclosure of risk assessments;
· Treat the practice as an unfair and deceptive act under Section 5 of the FTC Act;
· Suspend Meta’s chatbot advertising program pending Commission review;
· Finalize the long-pending modifications to the 2020 order to strengthen privacy protections, including a proposed prohibition on monetizing minors’ data.

The groups are also urging the Commission to disclose its findings publicly.

The coalition emphasizes that Meta’s initiative is not a marginal product feature but part of a deliberate strategy to expand surveillance-driven marketing.
Without FTC intervention, they warn, Meta’s actions will normalize invasive AI data practices across the industry, further undermining consumer privacy and protection.

Quotes

“The FTC cannot stand by while Meta and its peers rewrite the rules of privacy and consumer protection in the AI era. Chatbot surveillance for ad targeting is not a distant threat—it is happening now. Meta’s move will accelerate a race in which other companies are already implementing similarly invasive and manipulative practices, embedding commercial surveillance deeper into every aspect of our lives.” Katharina Kopp, Deputy Director, Center for Digital Democracy (CDD)

“The FTC has a sordid history of letting Meta off the hook, and this is where it’s gotten us: industrial-scale privacy abuses brought to you by a chatbot that pretends to be your friend,” said John Davisson, Director of Litigation for EPIC. “And where is the Trump-Ferguson FTC? Slow-walking a critical enforcement action brought under Chair Khan to protect minors from Meta’s exploitative data practices. Meta’s appalling chatbot scheme should be a wake-up call to the Commission. It’s time to get serious about reining in Meta.”

Signatories

The 36 organizations that signed the letter include, among others: Center for Digital Democracy; Electronic Privacy Information Center; Public Citizen; Demand Progress Education Fund; ParentsTogether Action; Becca Schmill Foundation; Center for Economic Integrity; Fairplay; National Association of Consumer Advocates; Consumer Federation of America; 5Rights Foundation; Mothers Against Media Addiction (MAMA); and Common Sense Media.
* * *

The Center for Digital Democracy is a Washington, D.C.-based public interest research and advocacy organization, working on behalf of citizens, consumers, communities, and youth to protect and expand privacy, digital rights, and data justice.

The Electronic Privacy Information Center (EPIC) is a 501(c)(3) non-profit established in 1994 to protect privacy, freedom of expression, and democratic values in the information age through advocacy, research, and litigation. For more than 30 years, EPIC has fought for robust safeguards to protect personal information.

-

The transfer of TikTok’s United States operation to a group of Trump administration-selected controlling investors should be vigorously opposed, as it would effectively endorse the current extractive system shaping our social media platforms and most other commercial digital services.

TikTok orchestrates its social media marketing business in the same ways that Meta and Google do — harvesting our data, leveraging the power of the now ubiquitous generative artificial intelligence products, closely partnering with the most powerful global brands, ad agencies and adtech companies and enabling an array of human and virtual influencers — all to generate the mechanisms of “engagement” that are the core of the commercial surveillance monetization model for our digital media.

How are we ever to reform our country’s current digital media giants — as well as the AI behemoths lined up to become new, ad-saturated marketplaces — if our government uncritically sanctions the current business model?

A US TikTok should be required, at the very least, to operate its data practices in ways that really protect privacy, shield users from manipulative and unfair marketing tactics, adopt accountable generative AI applications, and serve in ways that strengthen our democracy, including by promoting civic discourse, civil rights and diverse viewpoints.

While such proposals may appear to be digital “pie in the sky” aspirations at this perilous political moment, the transfer of TikTok’s US ownership and its connections to recent and pending mega-media mergers is precisely the moment to make our views known and put up a fight.

Of course, the debate over TikTok here has focused on owner ByteDance’s relationship with China and the concern that its autocratic government will surveil and target Americans.

While that’s a legitimate issue, Google, Meta, Amazon and many other digital media companies are as serious a threat to us personally and collectively.
They are all conducting far-reaching data surveillance on Americans (and people worldwide), unleashing algorithms and online content designed to profoundly influence what we think, do and consume. Disinformation on these platforms is not only abundant but supported. As we saw with the Cambridge Analytica privacy scandal and elections in the US and in Europe, US-based platforms can easily be manipulated by foreign and other actors. There are still endless headlines about the latest social media-inspired tragedy — from individual acts leading to suicide and violence to the promotion of hate and even genocide — which sadly illustrate why our digital media system is in desperate need of transformative change.

The Trump administration’s unleashing of generative AI technologies, which are now used by our platforms to accelerate and deepen the impact of their commercial surveillance marketing, is hurtling our digital media system toward gathering and analyzing even more information on us and more efficiently deploying it to better sell, influence and direct our behaviors.

There is another looming problem with US President Donald Trump’s TikTok plan — the expansion of MAGA-influenced media control over that platform, as well as leading news services, streaming video channels, broadcast stations and media production studios. In particular, this would be the likely role played by Trump ally and the billionaire chair of Oracle, Larry Ellison. Transferring key TikTok assets to be managed by Oracle is key to the White House’s plans.

As we know, Ellison’s deep pockets enabled his son David recently to acquire Paramount/CBS. There have already been a number of troubling concessions to the Trump administration to get the deal through, including installing a politically conservative ombudsman at CBS News, acquiring the Free Press website and tapping its founder Bari Weiss to lead CBS’s newsroom.

Next on the agenda is the Ellisons’ purchase of Warner/Discovery/CNN.
Their potential control over two major news operations — which would likely see layoffs and staff purges — could provide Trump and his allies greater ability to shape the information narrative to their benefit. A combined Paramount/Warner would also provide the Ellisons a slew of streaming video, cable and broadcast channels (including sports and animation), film production facilities, adtech operations and more. Imagine all these media properties, including TikTok, acting synchronously, supporting a MAGA agenda that leverages the massive data gathering and targeting power of contemporary digital platforms to reach individuals and influence their political perspectives.

Given Oracle’s considerable business interest and expertise in digital data used for marketing, its “oversight” of a US TikTok algorithm will not lead to better privacy and consumer protections for Americans. Ellison’s Oracle has long provided a host of data tools for digital marketing, and has also added numerous generative AI tools to advance it. The company touts its ability, for example, “to create a connected and personalized experience,” including by combining “online, offline and third-party data with AI and machine learning.”

According to reports, ByteDance will still control TikTok’s e-commerce and advertising business in the US. This means that the numerous global and US-based advertisers who have found significant success exploiting TikTok will continue to operate in a business-as-usual manner, able to purchase advertising and influence relatively seamlessly across the globe. Given that access to data is central for this key revenue generator, how exactly will Oracle and ByteDance work together to keep TikTok’s more than $16 billion US revenues flowing?
This question demands answers now.

There is an array of policy issues that can be used to challenge Trump’s TikTok scheme, helping publicize the need to reform US digital media and also potentially slowing down the final transfer of ownership, including numerous data privacy and consumer protection issues. The Ellisons’ takeover of Paramount/CBS also involves significant data gathering by its streaming video channels, for example. The antitrust implications should be reviewed to address the impact on competition involving TikTok, Paramount/CBS, Warner/Discovery/CNN, Oracle and its many clients.

There should be challenges filed at the Federal Trade Commission, Department of Justice and state attorneys general, as well as public hearings on what this means for consumers, creators, young people, journalism and the institutions supporting democratic discourse.

A raft of other questions should be raised, including about the proposed set of TikTok’s owners and their own involvement with China and other potential conflicts. For example, Oracle has recently brokered a “gigantic contract” with Chinese e-commerce company Temu. It is also profiting from serving as TikTok’s cloud computing provider. However, the company is currently experiencing a cash flow problem, according to Bloomberg, and has announced layoffs, raising questions about whether Trump’s TikTok deal is also designed to financially boost an ally.

Oracle’s recent expanded alliance with Google to offer that company’s most advanced Gemini AI services on its cloud illustrates the web of digital partnerships that undermine competition and increase the prospects of greater data harvesting. Silver Lake Partners, one of the other proposed US investors, has invested in numerous Chinese companies, including one accused of facilitating surveillance on the ethnic Uyghur community there.
Abu Dhabi-based MGX, an AI-focused investment company that is being awarded a US TikTok ownership role and is supporting Trump’s “Stargate” AI initiative here, has a range of financial relationships, including with Elon Musk and OpenAI, which require scrutiny as well.

I was among the digital rights advocates working in Washington, DC, in the very early 1990s as the world wide web became ad supported and commercialized. Our NGO (then called the Center for Media Education) was one of the very few to oppose the role that data tracking would play in delivering targeted marketing. Industry lobbyists and their political allies were successful in making sure there were no impediments to extensive data-mining online — which is why today we still don’t have a federal consumer privacy law.

If the internet has an “original sin,” it was allowing the major platforms and their brand-advertiser and data-broker partners to transform the internet into a highly centralized and powerful apparatus designed to place the interests of marketers and their oligopolistic platform collaborators ahead of the needs of a civil and equitable society.

We are at a critical moment today — just as important as those early days. Generative AI has been rapidly adopted across our digital, industrial and marketing sectors. It is accelerating the capabilities of the commercial surveillance model and, through partnerships and alliances, allowing our current leading online giants and a few newcomers to be among the dominant players.

The proposal to allow Trump’s political allies to operate TikTok in the US without significant reforms and safeguards, as well as the likely approval of a MAGA media industry trifecta, provides a much-needed opportunity to articulate an alternative vision for US digital media.
One reason we have ended up with the current crisis, I believe, has been the historic and collective failure to articulate and support communications policies that would have helped our democracy better thrive. With Trump’s September executive order giving the “divestiture” 120 days to finalize, now is the time to use all our resources — including on TikTok and other social media — to offer a better and alternative outcome.

Originally published by Tech Policy Press, October 10, 2025

-

Press Release

CDD Joins Coalition of Child Advocates Urging Senate Commerce Committee Members to Advance COPPA 2.0

Letter

June 23, 2025

Dear Chairman Cruz, Ranking Member Cantwell, and Members of the Committee,

Today we write as a coalition of advocates dedicated to the mental and physical health, privacy, safety, and education of our nation’s youth, urging you to advance the Children and Teens’ Online Privacy Protection Act (S. 836), also known as COPPA 2.0. The children’s data privacy protection law is long outdated. Now, with new and even more powerful technologies, like AI, proliferating, and unscrupulous companies collecting ever more personal information from young people, it is more imperative than ever that Congress prioritize privacy protections for children and teens by updating the decades-old Children’s Online Privacy Protection Act (COPPA) and passing COPPA 2.0 out of your Committee.

COPPA 2.0 is an effective, widely supported, bipartisan update to its 25-year-old predecessor. And the Senate overwhelmingly approved this bill once already, by a vote of 91-3 in July 2024 as part of the Kids Online Safety and Privacy Act.

We appreciate the recent rule-making efforts of the FTC to update COPPA. However, certain updates – such as adding protections for teenagers 13 and over – can only be made by Congress, and thus COPPA 2.0 is still desperately needed. COPPA 2.0 extends privacy protections to teens, implements strong data minimization principles, bans targeted advertising to covered minors, gives families greater control over their data, and strengthens the law to ensure covered entities cannot evade enforcement. American families urgently need these protections.
Big Tech’s business model relies on the extraction of millions of data points on children and teens, all to rake in record profits through design features that maximize engagement and models that interpret youth’s emotional states in order to make more money off of highly targeted ads.

The use of targeted advertising results in kids being shown ads for alcohol, tobacco, diet pills, and gambling sites – and there is a growing understanding that platforms use highly detailed information about young users to target them with these inappropriate ads at the moment they are feeling insecure or emotionally vulnerable, precisely the moment at which they are most susceptible.

These privacy risks and harms are multiplied because social media platforms are designed to be addictive, so that kids will spend more time online – giving companies more opportunities to extract more information about young users, so they can even better target kids and their families with marketing messages, and target kids more often. As the former U.S. Surgeon General has advised, this misuse of young people’s personal information has contributed to a startling mental health crisis among our youth, along with a myriad of online harms, including sexual exploitation, rampant cyberbullying, and eating disorders. The data-driven business model, which is being exacerbated by AI, is directly at odds with the health, safety, and privacy of our nation’s children and teens, and Congress must act to put new safeguards in place.

COPPA 2.0 is a critical piece of the puzzle to protect children and teens. America’s youth and families cannot wait any longer. With our utmost respect, we ask that you move COPPA 2.0 forward.

Sincerely,

American Academy of Pediatrics
Center for Digital Democracy
Common Sense Media
Fairplay

-

Press Release

WARNING: POSSIBLE HOSTILE TAKEOVER OF FTC BY “DOGE” TEAM UNDER ELON MUSK — CONSUMER PROTECTIONS AT IMMEDIATE RISK

FOR IMMEDIATE RELEASE
April 4, 2025

WASHINGTON, D.C. — Alarming reports suggest that individuals associated with the so-called “DOGE team,” acting under the direction of Elon Musk, may be maneuvering to infiltrate and dismantle the Federal Trade Commission (FTC) from within. Sources indicate that DOGE staffers have already embedded themselves inside the agency, potentially with access to sensitive consumer data and a vast trove of corporate trade secrets housed within internal data systems. This setup may be laying the groundwork for illegal mass firings and a reduction in force—an unprecedented power grab that could cripple the FTC’s ability to protect the American public.

While details continue to emerge, the pattern is unmistakable. The DOGE team’s recent actions follow a disturbing playbook: bypassing norms, subverting legal processes, and setting the stage for institutional collapse. And there is at least one clear beneficiary—Elon Musk, whose company X (formerly Twitter) remains under a federal consent decree. A neutered FTC would eliminate one of the final safeguards standing between Musk and unchecked surveillance, exploitation, and algorithmic manipulation of users—including children.

The very foundation of federal consumer protection is in danger.

The FTC plays an irreplaceable role in:

● Shielding seniors and veterans from fraud
● Defending children’s safety and privacy online
● Blocking deceptive advertising and marketing practices
● Enforcing limits on monopolistic behavior and AI-driven risks

Without a fully functioning FTC, corporations would face fewer consequences, and consumers would be left vulnerable to lawless business practices. This would open the floodgates to a “race to the bottom” economy—one where exploitation, manipulation, and impunity replace fair play, innovation, and trust. The consequences would be dire—especially for children, vulnerable populations, and small businesses.

We urge Chair Ferguson and Commissioner Holyoak to act now. Stand firm against the DOGE team’s attempted subversion. Uphold your oaths to the institution—and to the Constitution.

The American public deserves a functioning watchdog—not a hollowed-out institution hijacked by billionaires seeking to rewrite the rules in their favor.

***

The Center for Digital Democracy is a public interest research and advocacy organization, established in 2001, which works on behalf of citizens, consumers, communities, and youth to protect and expand privacy, digital rights, and data justice. CDD’s predecessor, the Center for Media Education, led the campaign for the passage of the Children's Online Privacy Protection Act over 25 years ago in 1998.

-

Press Release

CDD Joins Twenty-Four Other Consumer Protection and Digital Rights Groups in Condemning the Illegal Firing of Two Democratic FTC Commissioners

Groups are urging lawmakers to launch a probe into the recent firings and to block the confirmation of President Donald Trump’s FTC nominee until the fired commissioners get their jobs back.

Read the full letter attached.

-

Press Release

In Firing The Two Democratic FTC Commissioners, Pres. Trump Places Every American Consumer At Grave Risk

Statement of Jeff Chester, executive director, Center for Digital Democracy
March 19, 2025

In a country where illegality is quickly replacing the rule of law, the action yesterday by Pres. Trump ending decades of bipartisan operations at the Federal Trade Commission (FTC) may seem insignificant. But anyone concerned about lower grocery prices, protecting privacy online, consumer protections for your money when buying goods and services, or whether children and teens can use the Internet without mass surveillance and manipulation should be very, very worried. Why did Pres. Trump do this now? It’s because the U.S. is on the verge of a transformation of how we shop, buy, view and interact with businesses online and offline—due to the confluence of Generative Artificial Intelligence, expansion of pervasive data gathering and personalized profiling, and the deepening of alliances and partnerships across Big Tech, Big Data, retailers, supermarkets, hospitals, data brokers, streaming TV networks and many others. Trump’s allies—especially Amazon, Google, Meta/Facebook, and TikTok—do not want any safeguards, oversight or accountability—especially at this time when immense profits are to be made for themselves. The Trump Administration is rewarding the very same billionaires and Tech Giants who are responsible for raising the prices we pay for groceries, obliterating our data privacy, and making it unsafe for kids to go on social media. Consumer advocates won’t be silent here, of course, and we will document and sound the alarm as Americans are increasingly victimized by Big Tech and large supermarket chains. The FTC has been transformed into a Trump Administration and special interest lapdog—instead of the federal watchdog guarding the interests and welfare of the public. Americans who struggle to make ends meet—whether they need help securing refunds, recovering from scams, or ensuring fair treatment—will be especially harmed. But let’s be clear. The action of the Trump Administration to remove the FTC as a serious consumer protection agency has helped make every American less safe.

--30--

-

Press Release

Statement on the Reintroduction of the Children and Teens’ Online Privacy Protection Act (COPPA 2.0) by Senators Markey and Cassidy

March 4, 2025
Center for Digital Democracy
Washington, DC
Contact: Katharina Kopp, kkopp@democraticmedia.org

The following statement is attributed to Katharina Kopp, Ph.D., Deputy Director of the Center for Digital Democracy:

“The Children and Teens’ Online Privacy Protection Act, reintroduced by Senators Markey and Cassidy and other Senate co-sponsors, is more urgent than ever. Children’s surveillance has only intensified across social media, gaming, and virtual spaces, where companies harvest data to track, profile, and manipulate young users. COPPA 2.0 will ban targeted ads to those under 16, curbing the exploitation, manipulation, and discrimination of children for profit. By extending protections to teens and requiring a simple ‘eraser button’ to delete personal data, this legislation takes a critical step in restoring privacy rights in an increasingly invasive digital world,” said Katharina Kopp, Deputy Director of the Center for Digital Democracy.

See also the full statement from Senators Markey and Cassidy here.

-

Regulating Digital Food and Beverage Marketing in the Artificial Intelligence & Surveillance Advertising Era

Ultra-processed food companies and their retail, online-platform, quick-service-restaurant, media-network and advertising-technology (adtech) partners are expanding their targeting operations to push the consumption of unhealthy foods and beverages. A powerful array of personalized, data-driven and AI-generated digital food marketing is pervasive online and is also designed to influence us offline (such as when we are at convenience or grocery stores). CDD has a number of reports that reveal the extent of this development, including an analysis of the market, ways to research, and where policies and safeguards have been enacted.

Unfortunately, there isn’t a single remedy to address such unhealthy marketing. Individuals and families can only do so much to reduce the effects of today’s pervasive tracking and targeting of people and communities via mobile phones, social media, and “smart” TVs. What’s required now is a coordinated set of policies and regulations to govern the ways ultra-processed food companies and their allies conduct online advertising and data collection, especially when public health is involved. Such an effort, moreover, must be broad-based, addressing a variety of sectors, such as privacy, consumer protection, and antitrust. Formulating and advancing these policies will be an enormous challenge, but it is one that we cannot afford to ignore.

CDD is working to address all of these issues and more. We closely follow the digital marketplace, especially from the food, beverage, retail and online-platform industries. We track, analyze and call attention to harmful industry practices, and are helping to build a stronger global movement of advocates dedicated to protecting all of us from this unfair and currently out-of-control system. We are happy to work with you to ensure everyone—in the U.S. and worldwide—can live healthier lives without being constantly exposed to fast-food and other harmful marketing designed to increase corporate bottom lines without regard to the human and environmental consequences.

-

Press Release

Statement on the Federal Trade Commission’s Amendments to the Children’s Online Privacy Protection Rule

January 16, 2025
Center for Digital Democracy
Washington, DC
Contact: Katharina Kopp, kkopp@democraticmedia.org

The following statement is attributed to Katharina Kopp, Ph.D., Deputy Director of the Center for Digital Democracy:

As digital media becomes increasingly embedded in children’s lives, it more aggressively surveils, manipulates, discriminates, exploits, and harms them. Families and caregivers know all too well the impossible task of keeping children safe online. Strong privacy protections are critical to ensuring their well-being and safety. The Federal Trade Commission’s (FTC) finalized amendments to the Children’s Online Privacy Protection Rule (COPPA Rule) are a crucial step forward, enhancing safeguards and shifting the responsibility for privacy protections from parents to service providers.

Key updates include:

● Restrictions on hyper-personalized data collection for targeted advertising: mandating separate parental consent for disclosing personal information to third parties, and prohibiting the conditioning of service access on such consent.
● Limits on data retention: imposing stricter data retention limits.
● Baseline and default privacy protections: strengthening purpose specification and disclosure requirements.
● Enhanced data security requirements: requiring robust information security programs.

We commend the FTC, under Chair Lina Khan’s leadership, for finalizing this much-needed update to the COPPA Rule. The last revision, in 2013, was over a decade ago. Since then, the digital landscape has been radically transformed by practices such as mass data collection, AI-driven systems, cloud-based interconnected platforms, sentiment and facial analytics, cross-platform tracking, and manipulative, addictive design practices. These largely unregulated, Big Tech- and investor-driven transformations have created a hyper-surveillance environment that is especially harmful and toxic to children and teens.

The data-driven, targeted advertising business model continues to pose daily threats to the health, safety, and privacy of children and their families. The FTC’s updated rule is a small but significant step toward addressing these risks, curbing harmful practices by Big Tech, and strengthening privacy protections for America’s youth online.

To ensure comprehensive safeguards for children and teens in the digital world, it is essential that the incoming FTC leadership enforces the updated COPPA Rule vigorously and without delay. Additionally, it is imperative that Congress enacts further privacy protections and establishes prohibitions against harmful applications of AI technologies.

* * *

In 2024, a coalition of eleven leading health, privacy, consumer protection, and child rights groups filed comments at the Federal Trade Commission (FTC) offering a digital roadmap for stronger safeguards while also supporting many of the agency’s key proposals for updating its regulations implementing the bipartisan Children’s Online Privacy Protection Act (COPPA). Comments were submitted by Fairplay, the Center for Digital Democracy, the American Academy of Pediatrics, and other advocacy groups.

The Center for Digital Democracy is a public interest research and advocacy organization, established in 2001, which works on behalf of citizens, consumers, communities, and youth to protect and expand privacy, digital rights, and data justice. CDD’s predecessor, the Center for Media Education, led the campaign for the passage of COPPA over 25 years ago in 1998.

-

Press Release

Streaming Television Industry Conducting Vast Surveillance of Viewers, Targeting Them with Manipulative AI-driven Ad Tactics, Says New Report

Digital Privacy and Consumer Protection Group Calls on FTC, FCC and California Regulators to Investigate Connected TV Practices

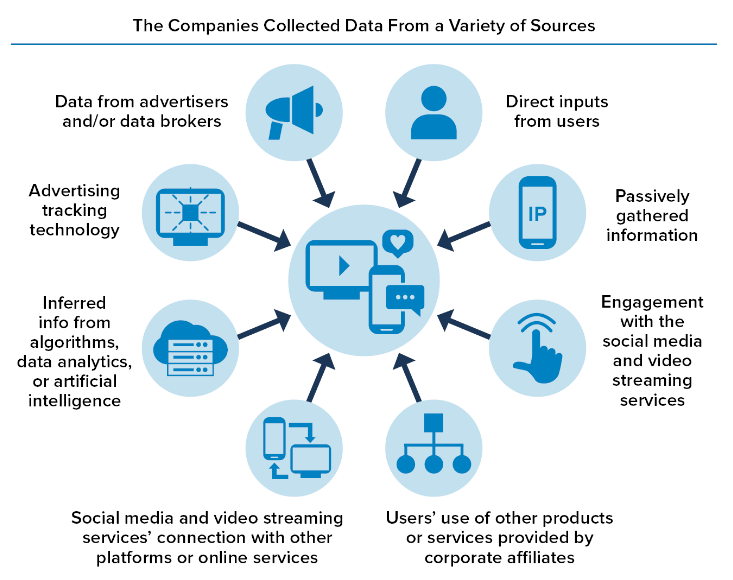

Contact: Jeff Chester, 202-494-7100, Jeff@democraticmedia.org

October 7, 2024

Washington, DC. The Connected TV (CTV) video streaming industry in the U.S. operates a massive data-driven surveillance apparatus that has transformed the television set into a sophisticated monitoring, tracking and targeting device, according to a new report from the Center for Digital Democracy (CDD). How TV Watches Us: Commercial Surveillance in the Streaming Era documents how CTV captures and harvests information on individuals and families through a sophisticated and expansive commercial surveillance system, deliberately incorporating many of the data-gathering, monitoring, and targeting practices that have long undermined privacy and consumer protection online.

The report highlights a number of recent trends that are key to understanding today’s connected TV operations:

● Leading streaming video programming networks, CTV device companies and “smart” TV manufacturers, allied with many of the country’s most powerful data brokers, are creating extensive digital dossiers on viewers based on a person’s identity information, viewing choices, purchasing patterns, and thousands of online and offline behaviors.
● So-called FAST channels (Free Advertiser-Supported TV)—such as Tubi, Pluto TV, and many others—are now ubiquitous on CTV, and a key part of the industry’s strategy to monetize viewer data and target them with sophisticated new forms of interactive marketing.
● Comcast/NBCU, Disney, Amazon, Roku, LG and other CTV companies operate cutting-edge advertising technologies that gather, analyze and then target consumers with ads, delivering them to households in milliseconds. CTV has unleashed a powerful arsenal of interactive advertising techniques, including virtual product placement inserted into programming and altered in real time. Generative AI enables marketers to produce thousands of instantaneous “hypertargeted variations” personalized for individual viewers. Surveillance has been built directly into television sets, with major manufacturers’ “smart TVs” deploying automatic content recognition (ACR) and other monitoring software to capture “an extensive, highly granular, and intimate amount of information that, when combined with contemporary identity technologies, enables tracking and ad targeting at the individual viewer level,” the report explains.
● Connected television is now integrated with online shopping services and offline retail outlets, creating a seamless commercial and entertainment culture through a number of techniques, including what the industry calls “shoppable ad formats” incorporated into programming and designed to prompt viewers to “purchase their favorite items without disrupting their viewing experience,” according to industry materials.

The report profiles major players in the connected TV industry, along with the wide range of technologies they use to monitor and target viewers. For example:

● Comcast’s NBCUniversal division has developed its own data-driven ad-targeting system called “One Platform Total Audience.” It powers NBCU’s “streaming activation” of consumers targeted across “300 end points,” including their streaming video programming and mobile phone use. Advertisers can use the “machine learning and predictive analytics” capabilities of One Platform, including its “vast… first-party identity spine” that can be coupled with their own data sets “to better reach the consumers who matter most to brands.” NBCU’s “Identity graph houses more than 200 million individuals 18+, more than 90 million households, and more than 3,000 behavioral attributes” that can be accessed for strategic audience targeting.
● The Walt Disney Company has developed a state-of-the-art big-data and advertising system for its video operations, including through Disney+ and its “kids” content. Its materials promise to “leverage streaming behavior to build brand affinity and reward viewers” using tools such as the “Disney Audience Graph—consisting of millions of households, CTV and digital device IDs… continually refined and enhanced based on the numerous ways Disney connects with consumers daily.” The company claims that its ID Graph incorporates 110 million households and 260 million device IDs that can be targeted for advertising using “proprietary” and “precision” advertising categories “built from 100,000 [data] attributes.”
● Set manufacturer Samsung TV promises advertisers a wealth of data to reach their targets, deploying a variety of surveillance tools, including an ACR technology system that “identifies what viewers are watching on their TV on a regular basis,” and gathers data from a spectrum of channels, including “Linear TV, Linear Ads, Video Games, and Video on Demand.” It can also determine which viewers are watching television in English, Spanish, or other languages, and the specific kinds of devices that are connected to the set in each home.

“The transformation of television in the digital era has taken place over the last several years largely under the radar of policymakers and the public, even as concerns about internet privacy and social media have received extensive media coverage,” the report explains. “The U.S. CTV streaming business has deliberately incorporated many of the data-surveillance marketing practices that have long undermined privacy and consumer protection in the ‘older’ online world of social media, search engines, mobile phones and video services such as YouTube.” The industry’s self-regulatory regimes are highly inadequate, the report authors argue. “Millions of Americans are being forced to accept unfair terms in order to access video programming, which threatens their privacy and may also narrow what information they access—including the quality of the content itself. Only those who can afford to pay are able to ‘opt out’ of seeing most of the ads—although much of their data will still be gathered.”

The massive surveillance and targeting practices of today’s connected TV industry raise a number of concerns, the report explains. For example, during this election year, CTV has become the fastest growing medium for political ads. “Political campaigns are taking advantage of the full spectrum of ad-tech, identity, data analysis, monitoring and tracking tools deployed by major brands.” While these tools are no doubt a boon to campaigns, they also make it easy for candidates and other political actors to “run covert personalized campaigns, integrating detailed information about viewing behaviors, along with a host of additional (and often sensitive) data about a voter’s political orientations, personal interests, purchasing patterns, and emotional states. With no transparency or oversight,” the authors warn, “these practices could unleash millions of personalized, manipulative and highly targeted political ads, spread disinformation, and further exacerbate the political polarization that threatens a healthy democratic culture in the U.S.”

“CTV has become a privacy nightmare for viewers,” explained report co-author Jeff Chester, who is the executive director of CDD. “It is now a core asset for the vast system of digital surveillance that shapes most of our online experiences. Not only does CTV operate in ways that are unfair to consumers, it is also putting them and their families at risk as it gathers and uses sensitive data about health, children, race and political interests,” Chester noted. “Regulation is urgently needed to protect the public from constantly expanding and unfair data collection and marketing practices,” he said, “as well as to ensure a competitive, diverse and equitable marketplace for programmers.”

“Policy makers, scholars, and advocates need to pay close attention to the changes taking place in today’s 21st century television industry,” argued report co-author Kathryn C. Montgomery, Ph.D. “In addition to calling for strong consumer and privacy safeguards,” she urged, “we should seize this opportunity to re-envision the power and potential of the television medium and to create a policy framework for connected TV that will enable it to do more than serve the needs of advertisers. Our future television system in the United States should support and sustain a healthy news and information sector, promote civic engagement, and enable a diversity of creative expression to flourish.”

CDD is submitting letters today to the chairs of the FTC and FCC, as well as the California Attorney General and the California Privacy Protection Agency, calling on policymakers to address the report’s findings and implement effective regulations for the CTV industry.

CDD’s mission is to ensure that digital technologies serve and strengthen democratic values, institutions and processes. CDD strives to safeguard privacy and civil and human rights, as well as to advance equity, fairness, and community

--30--

-

Press Release

Statement Regarding the FTC 6(b) Study on Data Practices of Social Media and Video Streaming Services

“A Look Behind the Screens: Examining the Data Practices of Social Media and Video Streaming Services”

Center for Digital Democracy
Washington, DC
Contact: Katharina Kopp, kkopp@democraticmedia.org

The following statement can be attributed to Katharina Kopp, Ph.D., Deputy Director, Center for Digital Democracy:

The Center for Digital Democracy welcomes the release of the FTC’s 6(b) study on social media and video streaming providers’ data practices and its evidence-based recommendations.

In 2019, Fairplay (then the Campaign for a Commercial-Free Childhood (CCFC)), the Center for Digital Democracy (CDD), and 27 other organizations, along with their attorneys at Georgetown Law’s Institute for Public Representation, urged the Commission to use its 6(b) authority to better understand how tech companies collect and use data from children.

The report’s findings show that social media and video streaming providers’ business model produces an insatiable hunger for data about people. These companies create a vast surveillance apparatus sweeping up personal data and creating an inescapable matrix of AI applications. These data practices lead to numerous well-documented harms, particularly for children and teens. These harms include manipulation and exploitation, loss of autonomy, discrimination, hate speech and disinformation, the undermining of democratic institutions, and most importantly, the pervasive mental health crisis among youth.

The FTC's call for comprehensive privacy legislation is crucial in curbing the harmful business model of Big Tech. We support the FTC’s recommendation to better protect teens but call, specifically, for a ban on targeted advertising to do so. We strongly agree with the FTC that companies should be prohibited from exploiting young people's personal information, weaponizing AI and algorithms against them, and using their data to foster addiction to streaming videos.

That is why we urge this Congress to pass COPPA 2.0 and KOSA, which will compel Big Tech companies to acknowledge the presence of children and teenagers on their platforms and uphold accountability. The responsibility for rectifying the flaws in their data-driven business model rests with Big Tech, and we express our appreciation to the FTC for highlighting this important fact.

________________

The Center for Digital Democracy is a public interest research and advocacy organization, established in 2001, which works on behalf of citizens, consumers, communities, and youth to protect and expand privacy, digital rights, and data justice. CDD’s predecessor, the Center for Media Education, led the campaign for the passage of COPPA over 25 years ago in 1998.

-

Press Release

Statement Regarding the U.S. Senate Vote to Advance the Kids Online Safety and Privacy Act (S. 2073)

Center for Digital Democracy
Washington, DC
July 30, 2024
Contact: Katharina Kopp, kkopp@democraticmedia.org

The following statement can be attributed to Katharina Kopp, Ph.D., Deputy Director, Center for Digital Democracy:

Today is a milestone in the decades-long effort to protect America’s young people from the harmful impacts caused by the out-of-control and unregulated social and digital media business model. The Kids Online Safety and Privacy Act (KOSPA), if passed by the House and enacted into law, will safeguard children and teens from digital marketers who manipulate and employ unfair data-driven marketing tactics to closely surveil, profile, and target young people. These include an array of ever-expanding tactics that are discriminatory and unfair. The new law would protect children and teens from being exposed to addictive algorithms and other harmful practices across online platforms, protecting the mental health and well-being of youth and their families.

Building on the foundation of the 25-year-old Children's Online Privacy Protection Act (COPPA), KOSPA will provide protections to teens under 17. It will prohibit targeted advertising to children and teens, impose data minimization requirements, and compel companies to acknowledge the presence of young online users on their platforms and apps. The safety by design provisions will necessitate the disabling of addictive product features and the option for users to opt out of addictive algorithmic recommender systems. Companies will be obliged to prevent and mitigate harms to children, such as bullying and violence, promotion of suicide, eating disorders, and sexual exploitation. Crucially, most of these safeguards will be automatically implemented, relieving children, teens, and their families of the burden to take further action. The responsibility to rectify the worst aspects of their data-driven business model will lie squarely with Big Tech, as it should have all along.

We express our gratitude for the leadership and support of Majority Leader Schumer, Chairwoman Cantwell, Ranking Member Cruz, and Senators Blumenthal, Blackburn, Markey, and Cassidy, along with their staff, for making this historic moment possible in safeguarding children and teens online. Above all, we are thankful to all the parents and families who have tirelessly advocated for common-sense safeguards. We now urge the House of Representatives to act upon their return from the August recess. The time for hesitation is over.

About the Center for Digital Democracy (CDD)

The Center for Digital Democracy is a public interest research and advocacy organization, established in 2001, which works on behalf of citizens, consumers, communities, and youth to protect and expand privacy, digital rights, and data justice. CDD’s predecessor, the Center for Media Education, led the campaign for the passage of COPPA over 25 years ago, in 1998.

-

Press Release

Statement from children’s advocacy organizations regarding the House Energy and Commerce Committee Subcommittee on Innovation, Data and Commerce May 23 markup of legislation addressing kids online safety and privacy

"Children and Teens Need Effective Privacy Protections Now"

“We have two reactions to the legislation the House Energy and Commerce Subcommittee on Innovation plans to consider on Thursday. On the one hand, we appreciate that the subcommittee is taking up the Kids Online Safety Act (KOSA) as a standalone bill, as we had recommended. Congressional action to make the internet safer for kids and teens is long overdue, so we welcome this step tomorrow. As the Members know, KOSA has 69 co-sponsors in the Senate, has bi-partisan support in both houses, and has overwhelming popular support across the country as demonstrated by numerous public polls and in the strong advocacy actions in the Capitol and elsewhere.

“On the other hand, we are very disappointed in the privacy legislation the subcommittee will take up as it relates to children and teens. We have always maintained, and the facts are clear on this, that stronger data privacy protections for kids and teens should go hand in hand with new social media platform guardrails. The bi-partisan COPPA 2.0 (H.R. 7890), to update the 25-year-old children's privacy law, is not being considered as a standalone bill on Thursday. And further, we are very disappointed with the revised text of the American Privacy Rights Act (APRA) discussion draft as it relates to children and teen privacy.

“When the APRA discussion draft was first unveiled on April 7th, we understood Committee leadership to say that the bill would need stronger youth privacy protections. More than a month later, there are no new effective protections for youth in the new draft that was released at 10:00 pm last night, compared with the original discussion draft from April. The new APRA discussion draft purports to include COPPA 2.0 (as Title II) but does not live up to the standalone COPPA 2.0 legislation introduced in the House last month, and supported on a bipartisan, bicameral basis, in terms of protections for children and teens. Furthermore, the youth provisions in APRA’s discussion draft appear impossible to implement without additional clarifications. Lastly, in addition to these highly significant shortcomings, we are also concerned that Title II of the new APRA draft may actually weaken current privacy protections for children, by limiting coverage of sites and services.

“Children and teens need effective privacy protections now. We expect the Committee to do better than this, and we look forward to working with them to make sure that once and for all Congress puts kids and teens first when it comes to privacy and online safety.”

Signed: Center for Digital Democracy, Common Sense Media, and Fairplay

-

Blog

CDD Urges the House to Promptly Mark Up HR 7890, the Children and Teens' Online Privacy Protection Act (COPPA 2.0)

COPPA 2.0 provides a comprehensive approach to safeguarding children’s and teens’ privacy via data minimization and a prohibition on targeted advertising – it’s not “just” about “notice and consent”

We commend Rep. Walberg (R-MI) and Rep. Castor (D-FL) for introducing the House COPPA 2.0 companion bill. The bill enjoys strong bipartisan support in the U.S. Senate. We urge the House to promptly mark up HR 7890 and pass it into law. Any delay in bringing HR 7890 to a vote would expose children, adolescents, and their families to greater harm.

Learn more about the Children and Teens’ Online Privacy Protection Act here:

Background:

The United States is currently facing a commercial surveillance crisis. Digital giants invade our private lives, spy on our families, deploy manipulative and unfair data-driven marketing practices, and exploit our most personal information for profit. These reckless practices are leading to unacceptable invasions of privacy, discrimination, and public health harms.

In particular, the United States faces a youth mental health crisis fueled, in part, by Big Tech. According to the American Academy of Pediatrics, children’s mental health has reached a national emergency status. The Centers for Disease Control and Prevention found that in 2021, one in three high school girls contemplated suicide, one in ten high school girls attempted suicide, and among LGBTQ+ youth, more than one in five attempted suicide. As the Surgeon General concluded in a report last year, “there are ample indicators that social media can also have a profound risk of harm to the mental health and well-being of children and adolescents.”

Platforms’ data practices and their prioritization of profit over the well-being of America’s youth significantly contribute to the crisis. The lack of privacy protections for children and teens has led to a decline in young people’s well-being. Platforms rely on vast amounts of data to create detailed profiles of young individuals to target them with tailored ads. To achieve this, addictive design features are employed, keeping young users online for longer periods of time and exacerbating the youth mental health crisis. The formula is simple: more addiction equals more data and more targeted ads, which translates into greater profits for Big Tech. In fact, according to a recent Harvard study, in 2022, the major Big Tech platforms earned nearly $11 billion in ad revenue from U.S. users under age 17.

The Children and Teens’ Online Privacy Protection Act (COPPA 2.0) modernizes and strengthens the only online privacy law for children, the Children’s Online Privacy Protection Act (COPPA). COPPA was passed over 25 years ago and is urgently in need of an update, including the extension of safeguards to teens. The bill has been passed out of Committee in the Senate, and Reps. Tim Walberg (R-MI) and Kathy Castor (D-FL) introduced COPPA 2.0 in the House in April. It’s time now for the House to act.
The Children and Teens’ Online Privacy Protection Act would:

- Build on COPPA and extend privacy safeguards to users who are 13 to 16 years of age;
- Require strong data minimization safeguards prohibiting the excessive collection, use, and sharing of children’s and teens’ data. COPPA 2.0 would:
  - Prohibit the collection, use, disclosure, or maintenance of personal information for purposes of targeted advertising;
  - Prohibit the collection of personal information except when the collection is consistent with the context and necessary to fulfill a transaction or service or provide a product or service requested;
  - Prohibit the retention of personal information for longer than is reasonably necessary to fulfill a transaction or provide a service;
  - Prohibit conditioning a child’s or teen’s participation on the child’s or teen’s disclosing more personal information than is reasonably necessary.

  Except for certain internal permissible operations purposes of the operator, all other data collection or disclosures require parental or teen consent.
- Ban targeted advertising to children and teens;
- Provide for parental or teen controls. It would provide for the right to correct or delete personal information collected from a child or teen, or content or information submitted by a child or teen to a website, when technologically feasible;
- Revise COPPA’s “actual knowledge” standard to close the loophole that allows covered platforms to ignore kids and teens on their site.

But what about…?

…Notice and Consent: doesn’t COPPA 2.0 rely on the so-called “notice and consent” approach, and isn’t the consensus that this is an ineffective way to protect privacy online?

No, COPPA 2.0 does not rely on “notice and consent”. It would provide a comprehensive approach to safeguarding children’s and teens’ privacy via data minimization. It appropriately restricts companies’ ability to collect, use, and share personal information of children and teens by default. The consent mechanism is just one additional safeguard.

1. By default, COPPA 2.0 would prohibit
   - the collection, use, disclosure, or maintenance of personal information for purposes of targeted advertising to children and teens. This is a flat-out ban; consent is not required.
   - the collection of personal information except when the collection is consistent with the context of the child’s or teen’s relationship with the operator and necessary to fulfill a transaction or service or provide a product or service requested.
   - the retention of personal information for longer than is reasonably necessary to fulfill a transaction or provide a service.
2. By extending COPPA protections to teens, COPPA 2.0 would further limit data collection from children and teens, as COPPA 2.0 would prohibit
   - conditioning a child’s or teen’s participation in a game, the offering of a prize, or another activity on the child’s or teen’s disclosing more personal information than is reasonably necessary to participate in such activity.
3. Any other personal information that companies would want to collect, use, or disclose requires parental consent or the consent of a teen, except for certain internal permissible operations purposes of the operator.

COPPA 2.0's data minimization provisions prevent the collection, use, disclosure, and retention of excessive data from the start. By not collecting or retaining unnecessary data, harmful, manipulative, and exploitative business practices will be prevented. The consent provision is important but plays a relatively small role in the privacy safeguards of COPPA 2.0.
But what about…?

…targeted advertising: don’t we need a clear ban of targeted advertising to children and teens?

Yes, and COPPA 2.0 bans targeted advertising while allowing for contextual advertising. Under COPPA 2.0 it is unlawful for an operator “to collect, use, disclose to third parties, or maintain personal information of a child or teen for purposes of individual-specific advertising to children or teens (or to allow another person to collect, use, disclose, or maintain such information for such purpose).”

But what about…?

…the transfer of personal information to third parties?

Yes, COPPA 2.0 requires verifiable consent by a parent or teen for the disclosure or transfer of personal information to a third party, except for certain internal permissible operations purposes of the operator. Verifiable consent must be obtained before collection, use, and disclosure of personal information via direct notice of the personal information collection, use, and disclosure practices of the operator. Any material changes from the original purposes also require verifiable consent.

(Note that the existing COPPA rule requires that an operator give a parent the option to consent to the collection and use of the child's personal information without consenting to the disclosure of his or her personal information to third parties under 312.5(a)(2). The FTC recently proposed to bolster this provision under the COPPA rule update.)

-

Press Release

Statement Regarding House Energy & Commerce Hearing: “Legislative Solutions to Protect Kids Online and Ensure Americans’ Data Privacy Rights”